When you search the Internet for Artificial Intelligence (AI), you are greeted with images of code in blue font, robots with white faces, and silhouettes of brains outlined by circuit boards and beaming lights.

However, none of this tells you what exactly AI is. These images also fail to tell you how Latinxs are impacted by AI, or will continue to be impacted.

Latinxs are one of the “minority groups projected to grow its representation in the most dramatic way; from 15% to more than 30% by 2050.” However, this growth is not reflected in the fields of science and engineering, where Latinxs make up “3% of employed doctoral scientists, and 2.8% doctoral engineers.”

AI “makes it possible for machines to learn from experience, adjust to new inputs (data) and perform human-like tasks.” These tasks range from driving cars and recognizing speech to performing heart surgery and predicting the next financial crisis. Which data is selected and how it is interpreted is entirely up to the creator, so the result is implicitly biased.

Researchers out of Stanford co-authored a paper that spoke to this challenge. Through their embedding tool, they discovered that words like “honorable” have “a closer relationship to the word ‘man’ than to the word ‘woman.’” The same bias was found across different ethnic groups, including Latinxs, who were less closely associated with the word “honorable.”
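To make the idea of a “closer relationship” between words concrete, here is a minimal sketch in Python. It assumes that each word is stored as a list of numbers (an embedding) and that closeness is measured with cosine similarity, a common choice for this kind of comparison. The vectors in `toy_embeddings` and the helper `association_gap` are invented for illustration only; they are not the Stanford researchers' data, tool, or method.

```python
import math


def cosine_similarity(u, v):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Hypothetical three-dimensional vectors, made up for this example.
# Real embeddings are learned from large text collections and have
# hundreds of dimensions.
toy_embeddings = {
    "man": [1.0, 0.0, 0.2],
    "woman": [0.0, 1.0, 0.2],
    "honorable": [0.8, 0.3, 0.5],
}


def association_gap(word, group_a, group_b, embeddings):
    """How much closer `word` sits to `group_a` than to `group_b`.

    A positive value means the word is more strongly associated with
    group_a; a negative value means group_b; zero means no difference.
    """
    to_a = cosine_similarity(embeddings[word], embeddings[group_a])
    to_b = cosine_similarity(embeddings[word], embeddings[group_b])
    return to_a - to_b


gap = association_gap("honorable", "man", "woman", toy_embeddings)
print(f"association gap for 'honorable' (man minus woman): {gap:.3f}")
```

With these made-up numbers the gap comes out positive, meaning “honorable” sits closer to “man” than to “woman.” In a real system the vectors are learned from text written by people, which is how the biases in that text can end up inside the model.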

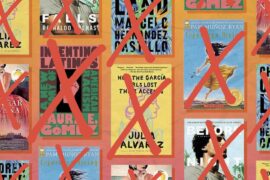

The Latinx community has to have a voice in the programming of algorithms for AI, algorithms that meet our needs and tell our story inclusively. It is the stories that give life to the work that we do, and our story needs to be programmed into all aspects of this country; otherwise it will be deleted.